A Draft & Notes Page | Draft Only

Quick Notes

>>SSL error on Mac Python: sudo bash "/Applications/Python 3.6/Install Certificates.command"<< (12/22/2018)

>>stopwords2.txt<< (11/03/2018)

>>Handle a = "doesn\u2019t" (Python 2): char = a.decode('unicode-escape'); print char --> doesn’t<< (11/03/2018)
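The note above is Python 2 syntax. Here is a minimal Python 3 sketch of the same idea. It assumes the text holds a literal backslash-u escape (as read from a file or scraped page), not the real character. In Python 3 a source literal "doesn\u2019t" is already the curly apostrophe. The unicode_escape codec only works reliably on ASCII input, which is why the string is encoded to ASCII first.

```python
# Python 3 sketch: turn a literal "\u2019" escape sequence into the real character.
raw = "doesn\\u2019t"  # backslash-u as plain text, like a Python 2 byte string

# unicode_escape works on bytes; encode to ASCII first (the input is pure ASCII here)
decoded = raw.encode("ascii").decode("unicode_escape")  # "doesn’t" with U+2019

# Optional: map the curly apostrophe to a plain ASCII apostrophe
ascii_version = decoded.replace("\u2019", "'")

print(ascii_version)
```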

>>File "/usr/bin/pip", line 9, in <module>

ImportError: cannot import name main

Solution: run which pip to find the working pip, then copy it over the broken one:

sudo cp pathname (e.g. /usr/local/bin/pip) /usr/bin/ (6/20/2018)

>>Vim search & replace: :%s/foo/bar/g<< (6/4/2018)
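To show what the trailing g flag changes, here is a rough Python illustration. vim_substitute is a hypothetical helper. It uses Python's re syntax, not Vim's regex dialect, so it only matches Vim for simple literal patterns like foo. With g, every match on a line is replaced. Without g, only the first match on each line is replaced.

```python
import re


def vim_substitute(text: str, pattern: str, repl: str, global_flag: bool = True) -> str:
    """Rough model of Vim's :%s/pattern/repl/[g] over every line of text.

    global_flag=True  -> like :%s/foo/bar/g  (all matches on each line)
    global_flag=False -> like :%s/foo/bar/   (first match on each line only)
    """
    count = 0 if global_flag else 1  # in re.sub, count=0 means "replace all"
    return "\n".join(re.sub(pattern, repl, line, count=count) for line in text.split("\n"))


sample = "foo foo\nbar foo"
print(vim_substitute(sample, "foo", "bar"))
print(vim_substitute(sample, "foo", "bar", global_flag=False))
```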

>>SSL error downloading NLTK data (nltk.download()) (6/4/2018)
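A common workaround for this error, sketched below, is to make urllib fall back to an unverified HTTPS context before calling nltk.download(). This is the opt-out described in PEP 476. It disables certificate checking for the whole process, so running "Install Certificates.command" (see the Mac Python note above) is the safer fix. The nltk lines are left commented out so the snippet runs without nltk installed. "stopwords" is only an example corpus name.

```python
import ssl

# Fall back to an unverified HTTPS context (PEP 476 opt-out).
# WARNING: this turns off certificate verification for urllib in this process.
try:
    _unverified = ssl._create_unverified_context
except AttributeError:
    pass  # very old Python that never verified certificates: nothing to change
else:
    ssl._create_default_https_context = _unverified

# import nltk
# nltk.download("stopwords")  # example corpus; pick whatever data you need
```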

>>Copy all local files in a directory to a server: "scp -r /home/user/html/* root@server_address:/mnt/volume-nyc1-07/crawler/crawling" (link)<< (4/18/2019)

>>Set up MS X Remote Desktop: sudo apt-get install xrdp xfce4 (3/24/2018)

>>Mongodb enable authentication (Enable Access Control)<< (11/27/2017)

>>Prevent Sublime Text 3 beta from updating to version 3.0: set "update_check": false<<

>>CS498rk1: How to Install and Use the Linux Bash Shell on Windows 10<< (08/29/2017)

>>Install MongoDB via brew: brew install mongodb<< (08/28/2017)

>>Install brew on macOS Sierra<< (08/28/2017)

/usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

Update: 8-27-2017 (End of 2017 Summer)

>>Mac Thunderbolt firmware 1.2 fails to update, fix from the terminal: softwareupdate --ignore ThunderboltFirmwareUpdate1.2<< (08/27/2017)

>>Sorting algorithms in C<< (04/7/2017)

>>How to Run Graphical Linux Desktop App on Win 10’s Bash Shell<< (export DISPLAY=:0) (01/18/2017)

Update: 12-31-2016 (The end of 2016 & happy new year! @_@)

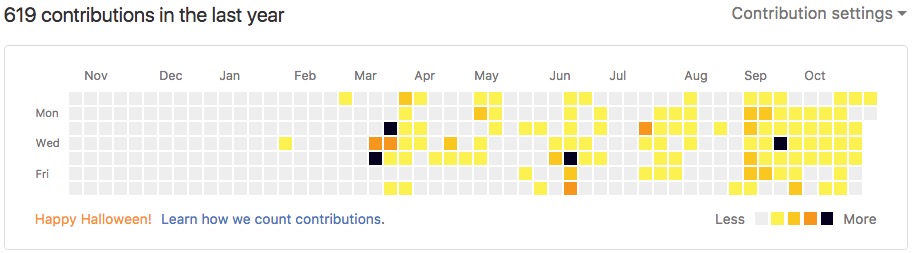

Update: 10-31-2016 (Happy Halloween!)

>>Ubuntu crash: 1. Ctrl + Alt + (one of F1-F6); 2. Alt + PrtSc + (type: reBoot)<< (10/26)

>>Reset Apple Bluetooth Keyboard for macOS<< (10/13)

>>Java for macOS 10.12 (Chinese Version)<< (10/13)

>>If you use mongoose, change the collection name to its plural form (mongoose pluralizes it; this is shit!)<< (9/16)

Update: 9-15-2016 (GitHub Changed Web UI)

>>How to restart WiFi connection on Linux? (ifconfig wlp4s0 down/up, service network-manager restart)<< (9/8)

>>How to find your IP address?<< (9/8)

>>How to install PyCharm on Linux<< (9/8)

>>How to setup MongoDB on Ubuntu and Linux Mint<< (9/8)

>>Ubuntu on Win: C:\Users\[Windows_username]\AppData\Local\lxss\home\[Ubuntu_username]<< (9/5) (Sample)

>>Examples of Emails to a Prof.<< (8/23)

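>>How to contact a professor by email<< (7/20)

>>Build a TODO single-page website with NodeJS and AngularJS<< (7/20)

>>Why doesn't “cd” work in a bash shell script? How?<< (7/20)

>>ThinkPad YOGA 11e: touchscreen stops working after reinstalling Win10?<< (7/19)

>>Sublime Text, a fantastic editor (Win, Chinese offline installer)<< (7/19) (official site)

>>Asus T100HA Problem turning on<< (6/30)

>>Download Google Chrome (Win, Chinese offline installer)<< (6/30) (official site)

>>Download Firefox 47 (Win, Chinese offline installer)<< (6/22) (official site)

>>Download Avira Free Antivirus (小红伞) (Win, Chinese offline installer)<< (6/22) (official site)

>>How to remove the Web and Windows tabs from the Win10 search box?<< (6/18)

>>Windows 7 connects to Wi-Fi but cannot access the Internet?<< (6/18)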

Update: 6-16-2016


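>>Click to upgrade Win7 to Win10<< (5/?) Click "Upgrade now" - "Save" (anywhere, or to the desktop) - then click the downloaded file in the bottom-left corner of the browser to open it - Yes - (Checking for updates) - the update then downloads automatically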

Basic Linux Commands

When you cannot start mongod: run ps aux | grep mongod, then kill the PID of the running mongod process (kill PID)
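The PID lookup can be scripted. The minimal sketch below parses ps aux output and drops the header row and the grep process itself, which ps aux | grep mongod would otherwise list too. find_pids and stop_processes are hypothetical helper names. It assumes the standard 11-column ps aux layout (USER PID ... COMMAND). stop_processes defaults to a dry run and only sends SIGTERM (what a plain kill does) when dry_run=False.

```python
from __future__ import annotations

import os
import signal
import subprocess


def find_pids(name: str, ps_output: str) -> list[int]:
    """Return PIDs from `ps aux` text whose COMMAND contains `name` (grep itself excluded)."""
    pids = []
    for line in ps_output.splitlines()[1:]:  # first row is the USER PID %CPU ... header
        fields = line.split(None, 10)  # 11 columns; COMMAND may contain spaces
        if len(fields) < 11:
            continue
        command = fields[10]
        if name in command and "grep" not in command:
            pids.append(int(fields[1]))
    return pids


def stop_processes(name: str, dry_run: bool = True) -> list[int]:
    """Find matching processes via `ps aux`; send SIGTERM only when dry_run is False."""
    out = subprocess.run(["ps", "aux"], capture_output=True, text=True, check=True).stdout
    pids = find_pids(name, out)
    if not dry_run:
        for pid in pids:
            os.kill(pid, signal.SIGTERM)  # equivalent to `kill PID`
    return pids
```

Usage: call stop_processes("mongod") first to see which PIDs would be hit, then stop_processes("mongod", dry_run=False).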

Click to download new_ubuntu_OS_setup_file (GNOME 16.04)

xx@oo: chmod a+x init.sh

xx@oo: ./init.sh (just type "./i" and press Tab to autocomplete)

Or you can copy and paste the following commands (note that the version on this page may be older than the downloadable "init.sh" file):

# create a root password; comment this out once root already has a password

sudo passwd

# update system

sudo apt-get update && sudo apt-get upgrade -y

# apt libs

# libgd-dev and php are the Ubuntu 16.04 package names; older releases used libgd2-xpm-dev and php5

sudo apt-get install -y python python3-pip python-numpy python-scipy python-matplotlib nodejs-legacy mongodb-server vim gcc g++ nodejs npm build-essential libssl-dev curl git ruby clang python-pip python-scrapy python-bs4 python-lxml python-html5lib python-lucene python-dev libpuzzle-dev cmake nginx openssh-server openvpn easy-rsa tcl tk tcllib libgd-dev php

# for X-remote

sudo apt-get install -y xrdp xfce4

# apt software

sudo apt-get install -y xrdp xfce4 gedit chromium-browser eclipse gdebi gimp blender stardict vlc smplayer dosbox unzip emacs audacity filezilla transmission comix openshot cheese deluge clamav clamtk virtualbox visualboyadvance-gtk

# pip libs

Download >>Python<<

python file.py

sudo pip3 install -U statsmodels ipykernel tqdm seaborn theano parameterized scikit-cuda torch torchvision Cython CherryPy joblib dtaidistance tensorflow gensim setuptools scikit-learn pandas beautifulsoup4 pymongo selenium nltk numpy scrapy matplotlib lxml scipy ipython jupyter keras patsy ggplot twitter tweepy google-api-python-client

python3 file.py

sudo pip install -U statsmodels ipykernel tqdm seaborn theano parameterized scikit-cuda torch torchvision Cython CherryPy joblib dtaidistance tensorflow gensim setuptools scikit-learn pandas beautifulsoup4 pymongo selenium nltk numpy scrapy matplotlib lxml scipy ipython jupyter keras patsy ggplot twitter tweepy google-api-python-client

sudo pip install -U setuptools && sudo pip3 install -U setuptools; sudo pip install -U scikit-learn && sudo pip3 install -U scikit-learn;sudo pip install -U pandas && sudo pip3 install -U pandas;sudo pip install -U beautifulsoup4 && sudo pip3 install -U beautifulsoup4;sudo pip install -U pymongo && sudo pip3 install -U pymongo;sudo pip install -U selenium && sudo pip3 install -U selenium;sudo pip install -U nltk && sudo pip3 install -U nltk;sudo pip install -U numpy && sudo pip3 install -U numpy;sudo pip install -U scrapy && sudo pip3 install -U scrapy;sudo pip install -U matplotlib && sudo pip3 install -U matplotlib;sudo pip install -U lxml && sudo pip3 install -U lxml;sudo pip install -U scipy && sudo pip3 install -U scipy;sudo pip install -U tensorflow && sudo pip3 install -U tensorflow;sudo pip install -U gensim && sudo pip3 install -U gensim;sudo pip install -U ipython && sudo pip3 install -U ipython;sudo pip install -U jupyter && sudo pip3 install -U jupyter;sudo pip install -U statsmodels && sudo pip3 install -U statsmodels;sudo pip install -U keras && sudo pip3 install -U keras;sudo pip install -U ggplot && sudo pip3 install -U ggplot;sudo pip install -U google-api-python-client && sudo pip3 install -U google-api-python-client;sudo pip install -U networkx && sudo pip3 install -U networkx
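Instead of hand-chaining a sudo pip / sudo pip3 pair for every package, a small script can generate one command per interpreter. This is a sketch only. pip_commands is a hypothetical helper, and the package list is copied from the chain above. It uses python -m pip so each install goes to the interpreter you name, which avoids pip/pip3 mix-ups. It only prints the commands; add sudo yourself if you install system-wide.

```python
# Packages taken from the chained pip / pip3 command above.
PACKAGES = [
    "setuptools", "scikit-learn", "pandas", "beautifulsoup4", "pymongo",
    "selenium", "nltk", "numpy", "scrapy", "matplotlib", "lxml", "scipy",
    "tensorflow", "gensim", "ipython", "jupyter", "statsmodels", "keras",
    "ggplot", "google-api-python-client", "networkx",
]


def pip_commands(interpreters, packages):
    """Return argv lists like [python, "-m", "pip", "install", "-U", pkg, ...]."""
    return [[py, "-m", "pip", "install", "-U", *packages] for py in interpreters]


if __name__ == "__main__":
    # Print only; nothing is installed by this script.
    for cmd in pip_commands(["python", "python3"], PACKAGES):
        print(" ".join(cmd))
```

There is one trade-off. A single pip call aborts if any one package fails to install (for example, an old or unmaintained one). The original ;-separated chain keeps going past failures.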

sudo pip install -U setuptools

sudo pip install -U scikit-learn  # machine learning

sudo pip install -U pandas

sudo pip install -U beautifulsoup4

sudo pip install -U pymongo

sudo pip install -U selenium

sudo pip install -U nltk  # NLP

sudo pip install -U numpy

sudo pip install -U scrapy

sudo pip install -U matplotlib

sudo pip install -U lxml

sudo pip install -U scipy

sudo pip install -U tensorflow  # machine learning and deep neural networks

sudo pip install -U gensim  # topic modeling and word embeddings (e.g. word2vec)

sudo pip install -U ipython

sudo pip install -U jupyter  # Jupyter Notebook

sudo pip install -U keras  # deep learning

sudo pip install -U statsmodels

sudo pip install -U patsy

sudo pip install -U ggplot

sudo pip install -U google-api-python-client

-------

sudo pip install -U grass  # GRASS GIS

# npm software

sudo npm install -g express forever

Ubuntu 16.04: How to Move Unity’s Left Launcher to The Bottom

gsettings set com.canonical.Unity.Launcher launcher-position Bottom

To revert:

gsettings set com.canonical.Unity.Launcher launcher-position Left

Mac Software (Not in the App Store)

Download

>>Scroll Reverser (set different scroll directions for Mouse and Trackpad)<< |

>>Go2Shell (Website)<< |

>>MacTex<< |

>>Python<< |

>>Python3.8.8<< |

>>Python2.7.18<< |

>>Anaconda<< |

>>Nodejs<< |

>>KugouMusic<< |

>>Github<< |

>>Sublime Text 2<< |

>>Sublime Text 3<< |

>>Atom<< |

>>Visual Studio Code<< |

>>MongoHub<< |

>>Android File Transfer<< |

>>Logitech Options<< |

>>Firefox<< |

>>Chrome<< |

>>VLC<< |

>>TeamViewer<< |

>>Paintbrush2<< |

>>MySQL Workbench<< |

>>Java<< |

>>JDK8<< |

>>PyCharm<< |

>>JetBrains Student Registration<< |

>>VirtualBox<< |

>>Dropbox<< |


Other Linux Software

Dropbox |

Youdao Dictionary (有道词典) |

Sublime Text 3(S/N) |

Chrome |

WPS |

Slack |

JetBrains Products

Quick Links

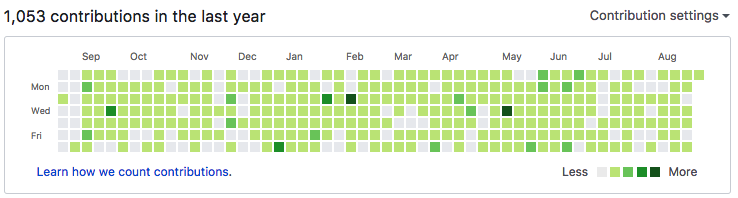

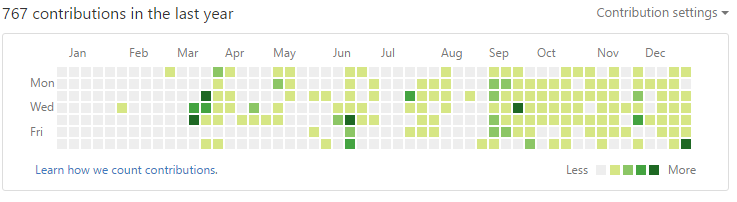

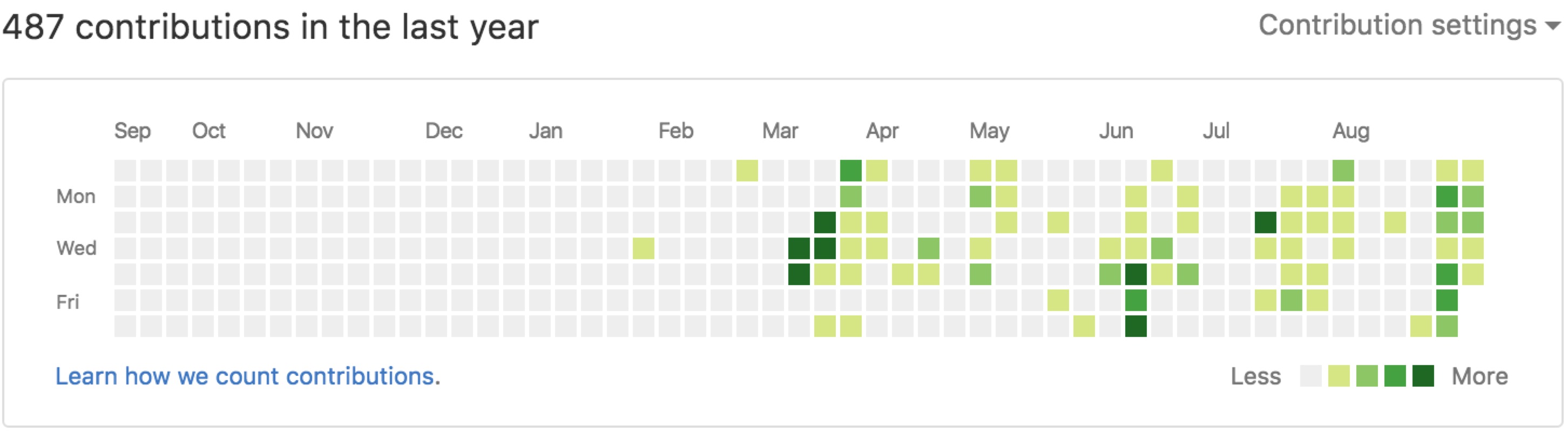

- Go to my Github or Homepage

- ChatGPT | Claude3 | TradingView

- Dealmoon (北美省钱快报)

- 21US Deals (美国打折网)

- Slickdeals

- Amazon | eBay | Target

- (BC | DBK, OLE, YF)

- YouTube

- Bilibili (哔哩哔哩) | Ixigua Video (西瓜视频)

- Douban Movies (豆瓣电影) | Douban TV (豆瓣电视剧)

- cnBeta | ITHome (IT之家)

- Engadget | Autoblog

- Gmail | Google Calendar

- Google Drive | Baidu Netdisk (百度网盘)

- Retro Game Handhelds

- 天地劫 homepage

- 天地劫 PVE rankings, soul stones, accessories (24.08)

- 天地劫 NGA forum

- 天地劫 Wiki: max-level hero (英灵) stats table

- Linkedin

- Rakuten

- Get a Plain Page

Common Software

Google Chrome (Mac, Win, Linux)

7-Zip file archiver (Mac, Win, Linux)

VLC video player (Mac, Win, Linux)

Visual Studio Code (Mac, Win, Linux)

Sublime Text (Mac, Win, Linux)

Atom (Mac, Win, Linux)

Github Desktop (Mac, Win)

Anaconda (Mac, Win, Linux)

R Studio (Mac, Win, Linux)

Slack (Mac, Win, Linux)

Zoom Workspace (Mac, Win, Linux)

LibreOffice (Mac, Win, Linux | Chinese)

WPS Office (Mac, Win, Linux)

Yozo Office (永中 Office) (Win, Linux)

Youdao Dictionary (有道词典) (Mac, Win, Linux)

Scroll Reverser (Mac ONLY, separate scroll directions for mouse and trackpad)

Go2Shell (Mac ONLY, Website)

Telegram (Mac, Win, Linux)

TeamViewer (Mac, Win, Linux)

Firefox (Mac, Win, Linux)

Logitech G HUB (Mac, Win, Linux, G-series mouse button configuration)

Android File Transfer (Mac, Win, Linux)

Steam (Win, Mac, Linux)

Epic Games (Win, Mac)

Blizzard Battle.net (Win, Mac)

AWS S3 & Kubectl

list pods

kubectl get pods

kubectl get pods | grep [partial-pod-name]   (note: -n selects a namespace, e.g. kubectl get pods -n [namespace])

create a pod

kubectl create -f /[dir]/[filename].yml

connect to pod

kubectl exec -t -i [pod name] -- bash

delete a pod

kubectl delete pod [pod name]

S3 operations

aws s3 ls/cp/rm/mv | more

grep

Search keywords in local files

grep -Hr "Message" .
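For machines without grep (e.g. plain Windows), here is a rough Python equivalent of grep -Hr "Message" .: it walks the tree recursively and prints path:line for each hit. grep_recursive is a hypothetical helper name. One simplification: it does a plain substring match, whereas grep treats the pattern as a regular expression. For a literal word like "Message" the two give the same results.

```python
from __future__ import annotations

import os


def grep_recursive(keyword: str, root: str = ".") -> list[str]:
    """Return "path:line" strings for every line containing `keyword` under `root`."""
    matches: list[str] = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in sorted(filenames):
            path = os.path.join(dirpath, filename)
            try:
                with open(path, encoding="utf-8", errors="ignore") as fh:
                    for line in fh:
                        if keyword in line:
                            text = line.rstrip("\n")
                            matches.append(f"{path}:{text}")  # -H: prefix with filename
            except OSError:
                continue  # unreadable file (permissions, broken symlink): skip it
    return matches


if __name__ == "__main__":
    for hit in grep_recursive("Message", "."):
        print(hit)
```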

Hadoop/HDFS

"Brief Instructions"

Common Hadoop HDFS file commands

"ls: list files or show details"

hadoop fs -ls /

hadoop fs -ls -R /

"put: local to HDFS"

hadoop fs -put <local file> <hdfs file> (the HDFS file must not already exist)

"get: HDFS to local"

hadoop fs -get <hdfs file> <local file or dir>

"cat: view the file contents"

hadoop fs -cat <hdfs file>

"mkdir: create a new dir"

hadoop fs -mkdir <hdfs dir>

"rm: remove files/folders from HDFS"

hadoop fs -rm <hdfs file> ...

hadoop fs -rm -r <hdfs dir> ...

"du: size of files"

hadoop fs -du <hdfs path>

hadoop fs -du -s <hdfs path>

hadoop fs -du -h <hdfs path>

"text: text output of files"

hadoop fs -text <hdfs file>

Spark/PySpark

"Run on pyspark 3.0 with graphframes 0.8.0"

$SPARK_HOME/bin/pyspark --packages graphframes:graphframes:0.8.0-spark3.0-s_2.12

To install package type "/Users/USERNAME/anaconda3/bin/pip install ir_evaluation_py"

"install pyspark"

PySpark on macOS: installation and use

"vi ~/.bashrc"

export PATH=/Users/USERNAME/anaconda3/bin:$PATH

export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_162.jdk/Contents/Home/

export JRE_HOME=/Library/Java/JavaVirtualMachines/openjdk-14.0.1.jdk/Contents/Home/

export SPARK_HOME=/usr/local/Cellar/apache-spark/3.0.0/libexec

export PATH=/usr/local/Cellar/apache-spark/3.0.0/bin:$PATH

export PYSPARK_PYTHON=/usr/local/bin/python3

export PYSPARK_DRIVER_PYTHON=jupyter

export PYSPARK_DRIVER_PYTHON_OPTS='notebook'
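A quick sanity check catches a typo in these paths before pyspark fails with a vague Java or Spark error. Below is a minimal sketch. check_spark_env is a hypothetical helper. It only checks that JAVA_HOME and SPARK_HOME are set and point to existing directories, and that PYSPARK_PYTHON (if set) is executable. It does not verify the Java or Spark versions.

```python
from __future__ import annotations

import os
from typing import Mapping, Optional

# Directory variables from the ~/.bashrc snippet above.
DIR_VARS = ("JAVA_HOME", "SPARK_HOME")


def check_spark_env(env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return a list of problems with the PySpark-related variables ([] if none found)."""
    env = os.environ if env is None else env
    problems: list[str] = []
    for name in DIR_VARS:
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not os.path.isdir(value):
            problems.append(f"{name}={value} is not a directory")
    py = env.get("PYSPARK_PYTHON")
    if py and not os.access(py, os.X_OK):
        problems.append(f"PYSPARK_PYTHON={py} is not executable")
    return problems


if __name__ == "__main__":
    for problem in check_spark_env():
        print("warning:", problem)
```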

Conda

"install Anaconda3"

Official Download

Install on Mac

"source Anaconda3"

vi ~/.bashrc

export PATH=/Users/USERNAME/anaconda3/bin:$PATH

Terminal

"show disk usage"

du -h --max-depth=0 * | sort -hr
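du --max-depth is GNU-only; macOS du uses -d instead. Where du differs like this, a small Python script gives the same "largest first" listing. This is a sketch with hypothetical helper names (tree_size, human, sizes_sorted). It sums apparent file sizes, so its numbers can differ from du, which reports allocated disk blocks. Like the shell glob *, it skips dotfiles, and it does not follow symlinks.

```python
from __future__ import annotations

import os


def tree_size(path: str) -> int:
    """Apparent size in bytes of a file, or of all regular files under a directory."""
    if os.path.islink(path):
        return 0  # symlinks are not followed
    if os.path.isfile(path):
        return os.path.getsize(path)
    total = 0
    for dirpath, _dirnames, filenames in os.walk(path):
        for name in filenames:
            fp = os.path.join(dirpath, name)
            if os.path.islink(fp):
                continue
            try:
                total += os.path.getsize(fp)
            except OSError:
                pass  # file vanished or is unreadable
    return total


def human(n: float) -> str:
    """Format a byte count with du -h style units (B, K, M, G, T)."""
    for unit in ("B", "K", "M", "G"):
        if n < 1024:
            return f"{n:.0f}{unit}" if unit == "B" else f"{n:.1f}{unit}"
        n /= 1024
    return f"{n:.1f}T"


def sizes_sorted(root: str = ".") -> list[tuple[int, str]]:
    """(size, name) for each non-hidden entry in root, largest first."""
    entries = [
        (tree_size(os.path.join(root, name)), name)
        for name in os.listdir(root)
        if not name.startswith(".")  # the shell glob * skips dotfiles
    ]
    return sorted(entries, reverse=True)
```

Usage: for size, name in sizes_sorted("."): print(human(size), name).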

df

"save output (e.g. yarn logs) to a text file"

python xxxx.py &> output.txt

yarn logs -applicationId xxx &> logs.txt
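&> is a bash shortcut that sends both stdout and stderr to one file. If you need the same thing from inside a Python driver script, here is a minimal sketch using subprocess. run_to_file is a hypothetical helper. Like &>, it overwrites the log file. stderr is merged into the same file handle as stdout.

```python
from __future__ import annotations

import subprocess


def run_to_file(cmd: list[str], log_path: str) -> int:
    """Run `cmd` with stdout and stderr both written to log_path (like `cmd &> log_path`).

    Returns the command's exit code; the log file is overwritten.
    """
    with open(log_path, "w") as log:
        completed = subprocess.run(cmd, stdout=log, stderr=subprocess.STDOUT)
    return completed.returncode
```

Usage: run_to_file(["python", "xxxx.py"], "output.txt").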

MongoDB

"initialize MongoDB"

sudo mkdir -p /data/db

sudo chmod g+w /data/db

sudo mongod &

mongo

"Export":

(exit the mongo shell first, then run:)

mongoexport --db test --collection traffic --out sample.json

"Import":

(exit the mongo shell first, then run:)

mongoimport --db test --collection traffic --file sample.json

"find one":

db.collection_name.findOne()

"find sth":

db.collection_name.find({field_name: "value"})

"drop":

db.dropDatabase() / db.collection_name.drop()

"count":

db.collection_name.find().count()

"distinct":

db.collection_name.distinct("field_name")

"delete":

db.collection_name.remove({field_name: "value"})

GitHub

"update & push":

git pull

git add * / git rm filename.type

git commit -m "sth"

git push

Delete a folder locally and then push the deletion to GitHub, e.g.:

rm -rf folder

git add .

git commit -a -m "removed folder"

git push origin master

Click to download SIGGRAPH demo files done

Desktop Environment

sudo apt-get install xorg

XFCE:

sudo apt-get install xfce4

sudo apt-get install xubuntu-desktop

IceWM:

sudo apt-get install icewm iceconf icepref iceme icewm-themes

OpenBox:

sudo apt-get install openbox obconf openbox-themes

Fluxbox:

sudo apt-get install fluxbox fluxconf

LXDE:

sudo apt-get install lxde

Recons. Demo


Copyright © Jinda Han. 2015-2021. All Rights Reserved.